Isolation, Perception, and Communication

> We are missing the most critical scaling axis

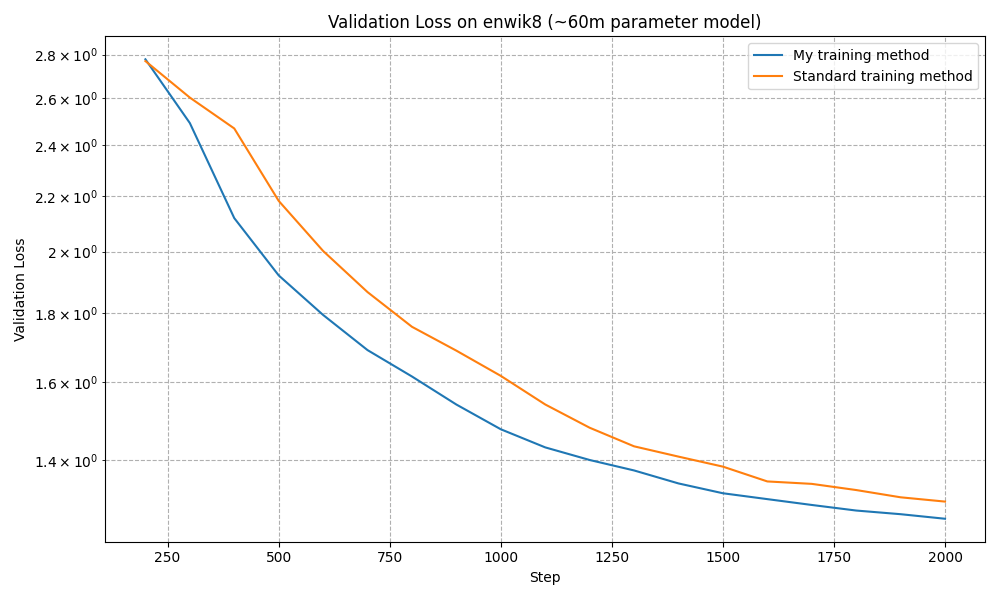

Ilya Sutskever referenced the above image in his recent talk at NeurIPS. Why? He hinted at an additional axis of scaling available to the models. We have train-time scaling and inference-time scaling. I strongly believe that the third axis of scaling is heterogeneous social training. In its truest sense, this third axis is raw search in its purest form. Let’s first look at our only currently available existence proof of AGI: human intelligence. How did it materialise?

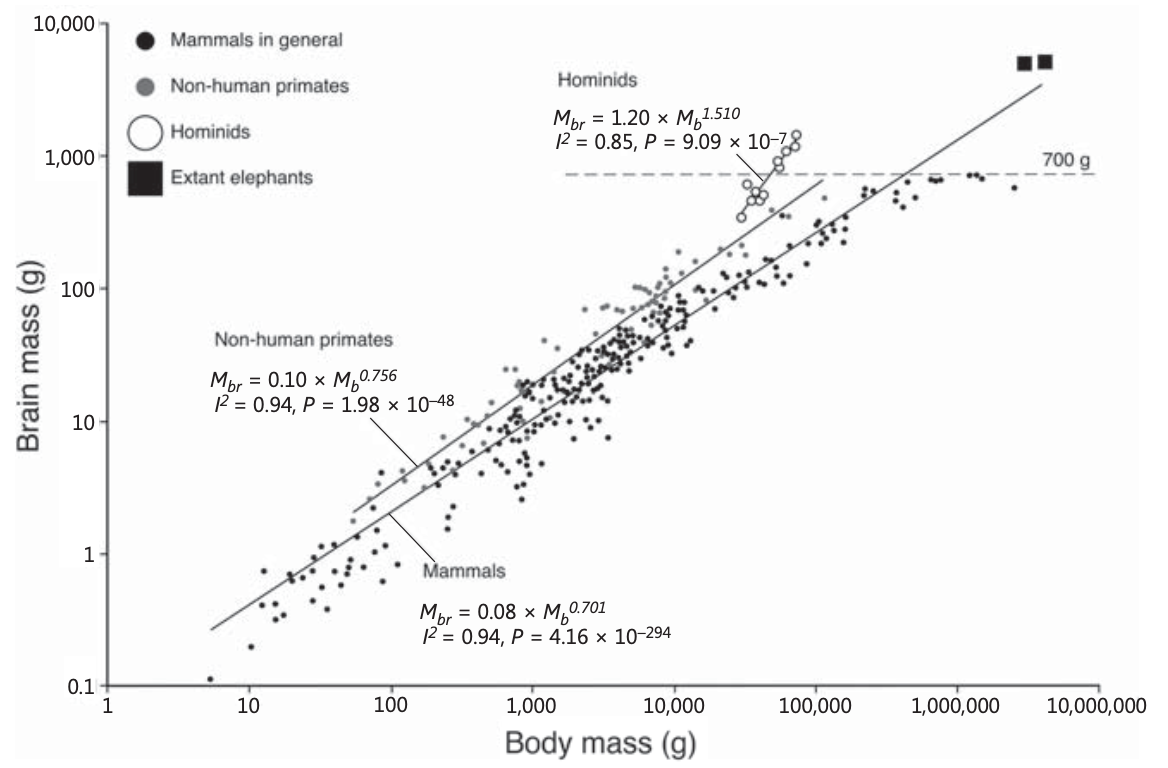

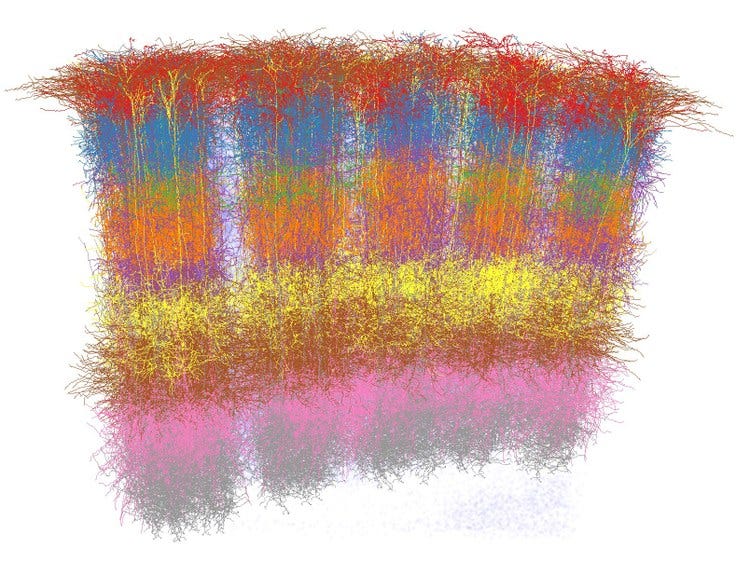

The ultimate unlock discovered by pure genetic evolution was the neocortex. The neocortex is built from a highly homologous set of cortical columns, a repeating structure that allowed evolution to rapidly scale the number of columns in parallel. Why is the neocortex advantageous, and why does it work so well? It’s hypothesized that this highly parallel and homologous structure allows each cortical column to build its own model of the environment. A large proportion of the columns receive the same sensory input, though often from a slightly different angle or sensory organ. In this way, the cortical columns can be seen as a population of highly specialized individuals that come to a consensus on identifying an object, a threat, or whatever is relevant to the organism at the time.

The transformer looks shockingly similar to the neocortex. Consider it this way: at its core, we have a stack of transformer blocks, each containing multi-head self-attention followed by an MLP network.

Above we have the cortical columns found in the neocortex. At each layer we observe the same pattern: lateral neuronal communication (self-attention), followed by MLP networks. Each token within the context of a transformer can be seen as representing a cortical column. I believe this is why scaling the context length of LLMs is so unreasonably effective at eliciting meta-learning and in-context learning (ICL).
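To make the analogy concrete, here is a minimal, dependency-free Python sketch of a single transformer block. It is illustrative only: the helper names (`self_attention`, `mlp`, `transformer_block`, `init_params`), the tiny dimensions, and the random weights are assumptions of the sketch, and layer normalization and causal masking are left out for brevity. The point is just to show where tokens talk to each other (attention) and where each token is processed on its own (the MLP).

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a, b):
    """Element-wise sum of two equally shaped matrices (the residual connection)."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def rand_matrix(rows, cols, rng):
    """Random weights or inputs; purely illustrative values."""
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


def init_params(d, rng):
    """Hypothetical parameter set for one block of width d."""
    params = {name: rand_matrix(d, d, rng) for name in ("wq", "wk", "wv", "wo")}
    params["w1"] = rand_matrix(d, 4 * d, rng)
    params["w2"] = rand_matrix(4 * d, d, rng)
    return params


def self_attention(x, params, n_heads):
    """Lateral step: every token (column) reads from every other token."""
    head_dim = len(x[0]) // n_heads
    q, k, v = (matmul(x, params[name]) for name in ("wq", "wk", "wv"))
    concat = [[] for _ in x]  # per-token concatenation of head outputs
    for h in range(n_heads):
        sl = slice(h * head_dim, (h + 1) * head_dim)
        for i, qi in enumerate(q):
            scores = [
                sum(a * b for a, b in zip(qi[sl], kj[sl])) / math.sqrt(head_dim)
                for kj in k
            ]
            weights = softmax(scores)
            mixed = [
                sum(w * vj[sl][c] for w, vj in zip(weights, v))
                for c in range(head_dim)
            ]
            concat[i].extend(mixed)
    return matmul(concat, params["wo"])


def mlp(x, params):
    """Local step: each token is transformed independently of the others."""
    hidden = [[max(0.0, val) for val in row] for row in matmul(x, params["w1"])]
    return matmul(hidden, params["w2"])


def transformer_block(x, params, n_heads=2):
    """Attention (communication) then MLP (local processing), both with residuals."""
    x = add(x, self_attention(x, params, n_heads))
    return add(x, mlp(x, params))
```

In this sketch, perturbing one token changes the attention output at every other position, while the MLP output at the untouched positions stays exactly the same. That is the sense in which attention plays the role of lateral communication and the MLP plays the role of per-column processing.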

So how did hominids take advantage of this structure, quickly scaling the width of the neocortex? They developed verbose language as a new substrate of symbolic perception independent of the environment. I believe the purpose of communication and language has less to do with communicating ideas, threats, or warnings than, at its most fundamental level, with programming each other’s brains at an evolutionary pace totally unavailable to lower-level biology and genetic mutation. Language is program search. Language is disembodied intelligence. The rapid growth of the neocortex in hominids served to accommodate a growing database of programs of thought, acquired from diverse individuals in the population and persisting across generations.

> We should be scaling heterogeneity and recurrence, not depth

Instead of building deeper and deeper models, we should be scaling both the models and the number of models laterally, and much more sparsely, like the cortical columns, inducing complex and deep thought via recurrence. Importantly, we want the networks to be isolated from each other, perceiving and understanding separately, before communicating their language of thought.
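The isolate, perceive, then communicate pattern can be sketched as a toy consensus loop. This is a deliberately simplified illustration rather than a training method: each "model" is reduced to a single number, and the function names (`perceive`, `communicate`, `ring_neighbours`, `run`), the ring wiring, the noise levels, and the averaging rule are all assumptions chosen for the sketch. Each model first forms a belief from its own private, noisy view; only afterwards do the models exchange messages, sparsely and over repeated rounds.

```python
import random


def perceive(signal, noise, rng):
    """Isolation and perception: a model forms its own estimate from a private noisy view."""
    return signal + rng.gauss(0.0, noise)


def ring_neighbours(n):
    """Sparse lateral wiring: each model hears only its two neighbours on a ring."""
    return [[(i - 1) % n, (i + 1) % n] for i in range(n)]


def communicate(beliefs, neighbours, rate=0.5):
    """One recurrent round: each model moves part-way toward the mean of its neighbours' messages."""
    updated = []
    for i, belief in enumerate(beliefs):
        messages = [beliefs[j] for j in neighbours[i]]
        target = sum(messages) / len(messages)
        updated.append((1 - rate) * belief + rate * target)
    return updated


def run(n_models=8, rounds=20, signal=1.0, seed=0):
    """Perceive in isolation, then communicate for several rounds.

    Returns the spread of beliefs before and after communication,
    together with the final beliefs.
    """
    rng = random.Random(seed)
    # Heterogeneity: every model perceives with a different noise level.
    beliefs = [perceive(signal, 0.2 + 0.1 * i, rng) for i in range(n_models)]
    initial_spread = max(beliefs) - min(beliefs)
    neighbours = ring_neighbours(n_models)
    for _ in range(rounds):
        beliefs = communicate(beliefs, neighbours)
    return initial_spread, max(beliefs) - min(beliefs), beliefs


if __name__ == "__main__":
    before, after, _ = run()
    print(f"spread before communication: {before:.3f}")
    print(f"spread after communication:  {after:.3f}")
```

Even though no model ever sees the whole population, repeated sparse rounds shrink the disagreement between them. Depth here comes from recurrence over a wide, loosely connected population rather than from stacking more layers.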

> Levels of intelligent abstraction

In our brain, the neuron is self-serving and greedy. It integrates only local information and wants to be as energy efficient as possible. Why? If a neuron can predict when it should fire, both to minimize its own activity and to cause other neurons to fire most effectively, it can avail of the energy supplied to those other neurons via blood flow. Stochastic activity in the brain is enough to motivate learning within it.

The introduction of verbose language allowed humans to emerge as the next level of abstraction, operating in a similarly greedy manner through social and market behaviour. Both abstractions involve isolation, followed by perception, and finally communication. We need to introduce this next level of abstraction to machine learning.

> An experiment on heterogeneous social training

I have developed an initial scaling methodology in which genetically evolved models, trained from scratch, communicate with each other and train on each other’s thoughts. In evaluations on enwik8 and enwik9, I find greatly improved data efficiency – while introducing zero additional data whatsoever. Both models were trained from scratch. I find that the performance gap grows with both model scale and the number of models, and holds across architectural changes.